Projects

The aim of the ChaNTeR project is to build and test high-quality singing voice synthesizers that pronounce the lyrics. Two modes are targeted:

- The "Text-To-Singing" mode, in which the user enters the lyrics and the notes of the score, which are then used to produce a singing voice offline.

- The "Singing instrument" mode, in which the user plays the singing voice synthesizer as an instrument through real-time control interfaces.

Text-To-Singing

The aim is to build a singing-voice synthesizer that takes a score and lyrics as inputs. Concatenative synthesis was chosen as the means of producing high-quality synthesis.

Database creation

As a first step, a textual corpus covering all diphones of the French language was written. Acapela tested various approaches to covering those units with French texts, and a greedy coverage algorithm yielded a list of 770 words to record. To validate the approach, preliminary tests were run by synthesizing short extracts of popular songs in various styles (pop, classical, etc.), using a reduced version of the corpus recorded by two singers (Marlène Schaff and Raphaël Treiner). This allowed us to adjust the procedure and the corpus before recording two full databases with the same singers. A third database was also recorded with a classically trained singer, Eleonore Lemaire. Each of those voices yielded about 900 audio files, which were automatically segmented into phonemes (with some manual correction).
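As a rough illustration of greedy coverage (a sketch only, not the procedure actually used by Acapela), the code below repeatedly picks the word that covers the most diphones still missing. The toy lexicon, its transcriptions, the `#` silence marker, and the function names are all assumptions made for the example.

```python
def diphones(phonemes):
    """Return the set of adjacent phoneme pairs, with '#' marking silence at both ends."""
    padded = ["#"] + list(phonemes) + ["#"]
    return {(a, b) for a, b in zip(padded, padded[1:])}


def greedy_cover(lexicon, targets):
    """Pick words one at a time, always taking the word that covers the most missing diphones."""
    remaining = set(targets)
    chosen = []
    while remaining:
        best = max(lexicon, key=lambda w: len(diphones(lexicon[w]) & remaining))
        gain = diphones(lexicon[best]) & remaining
        if not gain:
            break  # what is left cannot be covered by this lexicon
        chosen.append(best)
        remaining -= gain
    return chosen, remaining


# Toy lexicon with made-up phonemic transcriptions (illustrative only).
lexicon = {
    "papa": ["p", "a", "p", "a"],
    "pas": ["p", "a"],
    "tapis": ["t", "a", "p", "i"],
    "tas": ["t", "a"],
}
targets = set().union(*(diphones(p) for p in lexicon.values()))
words, missing = greedy_cover(lexicon, targets)
```

Greedy selection does not guarantee the smallest possible word list, but it is simple and usually gives a compact one, which matters when every selected word has to be recorded by a singer.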

Concatenative Text-To-Singing synthesis

A first singing synthesizer has been built from those recordings. It is based on diphone concatenation and uses the SuperVP engine to transform the samples. Alternative singing engines based on the aHM and PaReSy models have also been implemented in the Text-To-Singing system. A first model for automatic f0 curve generation has been developed, which reproduces variations specific to various singing styles, along with an algorithm for transforming the intensity (and other timbral aspects).
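To make the idea of diphone concatenation driven by a score more concrete, here is a minimal sketch that turns a note-annotated phoneme sequence into the list of diphone units to fetch, each with a flat target f0. It is not the project's code: the input format, the rule that a diphone takes the note of its right-hand phoneme, and the function names are assumptions, and the flat targets stand in for the much richer f0 curve model mentioned above.

```python
def midi_to_hz(note):
    """Convert a MIDI note number to a frequency in Hz (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def diphone_targets(score):
    """Map a non-empty list of (phoneme, midi_note) pairs to (diphone name, target f0) units.

    Assumption: each diphone is sung at the note of its right-hand phoneme,
    except the final diphone into silence, which keeps the last note.
    """
    padded = [("#", None)] + list(score) + [("#", None)]
    units = []
    for (left, left_note), (right, right_note) in zip(padded, padded[1:]):
        note = right_note if right_note is not None else left_note
        units.append((f"{left}-{right}", midi_to_hz(note)))
    return units


# "la la" sung on A4 then B4 (hypothetical input).
score = [("l", 69), ("a", 69), ("l", 71), ("a", 71)]
units = diphone_targets(score)
```

In a full system each unit name would select a recorded segment from the database, and an engine such as SuperVP would transpose and stretch it towards the target.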

Singing Instruments

A singing instrument makes it possible to control a synthesized voice in real time. It is thus composed of a singing synthesis engine and a control interface that links the gestures of a musician to the synthesizer's parameters. The two main goals in designing a singing instrument are:

- First, to design an interface that allows rich and varied musical possibilities, combining a distinct sound identity with fine control and expressivity, while leaving enough room for exploration.

- Then, to develop a singing synthesis engine that works in real time, i.e. that produces sound reactively in response to the musician's gestures.

Studied interfaces

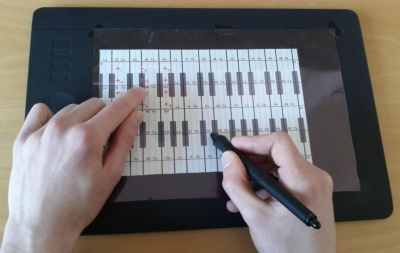

Graphic tablet

Several reasons justify the appeal of this tool. First, from a technological point of view, the graphic tablet provides multiple parameters with high spatial and temporal resolution. The Wacom Intuos 5M tablets have a 0.005 mm resolution and 2048 pressure levels, and their temporal resolution is 5 ms with a pen (stylus) and 20 ms with the fingers. Those high resolutions create the illusion of direct causality between gesture and sound, as with an acoustic musical instrument.

Second, the less static the parameters are, the more realistic the sound can be. As a consequence, the interface should invite precise, reproducible, intuitive and dynamic gestures. The stylus, initially designed for drawing, fulfills these criteria by offering a well-known kind of gesture, practiced since childhood: handwriting. Compared to the mouse or trackpad, the tablet makes it possible to play with subtle modifications of voice parameters, which are essential to the quality of the sound. Touch control, for its part, benefits from the finger dexterity that most users have already acquired through the widespread use of tablets and smartphones.

The Dualo Du-touch

More information here: http://dualo.org/le-principe-dualo/

Developed instruments

At this stage, two singing instruments have been developed: the Cantor Digitalis and Calliphony. Both programs use a graphic tablet as a control interface. The pitch and vocal effort are controlled with a stylus held in the preferred hand, and the articulation of the vowels and/or consonants is driven with the other hand. Each instrument uses its own synthesis engine.

Cantor Digitalis

Cantor Digitalis uses a parametric vocal synthesizer, producing a fully artificial sound whose properties can be modified with a set of parameters. It implements the linear source-filter model of voice production. The source, i.e., the vocal fold vibration, is computed using the Causal-Anticausal Linear Model (CALM). The source parameters are combined to control the pitch and several voice-quality dimensions, including voice tension, roughness, and vocal effort. The filter part, i.e., the influence of the oral and nasal tracts on the source, is computed with a parallel structure of second-order resonators called "formants". The filter parameters are combined to control the articulation of French vowels.
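The filter part of such a source-filter model can be sketched as follows. This is a simplified illustration, not the Cantor Digitalis implementation: a plain impulse train stands in for the CALM glottal source, the formant frequencies, bandwidths and amplitudes are rough made-up values for an /a/-like vowel, and all function names are assumptions.

```python
import math


def resonator(signal, freq, bandwidth, fs):
    """Two-pole (second-order) resonator centred on `freq` Hz with the given bandwidth in Hz."""
    r = math.exp(-math.pi * bandwidth / fs)            # pole radius, set by the bandwidth
    a1 = 2.0 * r * math.cos(2.0 * math.pi * freq / fs)  # pole angle, set by the frequency
    a2 = -r * r
    gain = 1.0 - r                                      # rough level normalisation
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = gain * x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def impulse_train(f0, duration, fs):
    """Crude periodic source: one unit impulse per pitch period (stand-in for a glottal model)."""
    period = int(round(fs / f0))
    return [1.0 if i % period == 0 else 0.0 for i in range(int(duration * fs))]


def parallel_formants(source, formants, fs):
    """Feed the same source to every resonator and sum the weighted outputs."""
    out = [0.0] * len(source)
    for freq, bandwidth, amplitude in formants:
        branch = resonator(source, freq, bandwidth, fs)
        out = [acc + amplitude * s for acc, s in zip(out, branch)]
    return out


FS = 16000
# (frequency Hz, bandwidth Hz, amplitude): illustrative values only.
VOWEL_A = [(700.0, 80.0, 1.0), (1200.0, 90.0, 0.5), (2600.0, 120.0, 0.25)]
source = impulse_train(f0=110.0, duration=0.1, fs=FS)
voice = parallel_formants(source, VOWEL_A, FS)
```

In a parallel structure each formant has its own amplitude, so the spectral balance of a vowel can be adjusted branch by branch, at the cost of having to set those amplitudes explicitly.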

All source parameters (pitch and voice quality) and filter parameters (articulation) are continuously controllable with the graphic tablet, or with a mouse on a dedicated interface. The stylus on the tablet controls the source parameters: its position on the horizontal axis defines the pitch, and its pressure is linked to the vocal effort. The position of a finger in a specific area of the tablet controls the articulation. The positions of the notes, and of the vowels in a vocalic triangle representation, are displayed on the graphic tablet. The musician can control the note with precision and expressiveness by sliding the stylus on the tablet's surface, and can articulate between the different vowels in a natural way by sliding a finger inside the vocalic triangle.
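The mapping from gestures to parameters might look like the sketch below. It is only an illustration under stated assumptions: the two-octave pitch range, the normalized tablet coordinates, the triangle corner positions, the (F1, F2) values and the use of barycentric interpolation are all hypothetical choices, not the actual Cantor Digitalis mapping. Only the 2048 pressure levels come from the tablet description.

```python
def stylus_to_source(x, pressure, low_note=48.0, high_note=72.0, pressure_levels=2048):
    """Map stylus x in [0, 1] to a continuous pitch in Hz and raw pressure to an effort in [0, 1]."""
    x = min(max(x, 0.0), 1.0)
    note = low_note + x * (high_note - low_note)      # continuous MIDI note, so glides are possible
    f0 = 440.0 * 2.0 ** ((note - 69.0) / 12.0)
    effort = min(max(pressure / (pressure_levels - 1), 0.0), 1.0)
    return f0, effort


def barycentric_weights(p, a, b, c):
    """Weights of point p with respect to the triangle corners a, b, c (they sum to 1)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


# Corner positions of the vocalic triangle and (F1, F2) targets in Hz: illustrative values.
TRIANGLE = {"i": (0.0, 1.0), "u": (1.0, 1.0), "a": (0.5, 0.0)}
FORMANTS = {"i": (250.0, 2250.0), "u": (250.0, 750.0), "a": (750.0, 1450.0)}


def finger_to_formants(finger):
    """Blend the corner vowels' formants according to the finger position in the triangle."""
    wi, wu, wa = barycentric_weights(finger, TRIANGLE["i"], TRIANGLE["u"], TRIANGLE["a"])
    weights = {"i": wi, "u": wu, "a": wa}
    f1 = sum(weights[v] * FORMANTS[v][0] for v in weights)
    f2 = sum(weights[v] * FORMANTS[v][1] for v in weights)
    return f1, f2
```

For instance, `stylus_to_source(0.875, 2047)` lands on A4 at full effort under these assumed ranges, and a finger halfway between the /i/ and /u/ corners yields formants halfway between those two vowels.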

/*The synthesis method used here is known as "rule-based" synthesis. Five band-pass filters model the first five formants of a sung voice signal. Their gain, center frequency and bandwidth are fixed for all the oral vowels of French. These parameters are then interpolated during vowel-to-vowel transitions to produce convincing articulation.*/

For more information, see: https://cantordigitalis.limsi.fr/

Calliphony

Calliphony is a system for modifying pre-recorded speech signals. It makes it possible to control the pitch and rhythm of the recorded signal in real time. As with the Cantor Digitalis, notes and vocal effort are controlled using a stylus in one hand. The question of pitch control has already been explored at length in previous work on the Cantor Digitalis, so the main question that arises here is: how can the rhythm of a speaking or singing voice be controlled?

In the literature, a syllable is composed of three parts: the onset, the vocalic nucleus, and the coda. For the real-time control of rhythm, however, this decomposition is set aside in favor of "rhythmic phases". Two types of rhythmic phases are defined for French: the vocalic phase, corresponding to vowels, and the consonantal phase, corresponding to consonants. A first approach to control is then to link the vocalic phase to a pressed key and the consonantal phase to a released key: the musician triggers a vowel by pressing the control key and a consonant by releasing it. In a musical context, this type of control can be inconvenient, as it allows no flexibility in the duration of the transitions between vowels and consonants. The precision with which the transitions, and thus the rhythm, are controlled can be improved by replacing the key, which is a binary interface, with a continuous interface such as a fader.
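The difference between the two kinds of control can be sketched as follows. This is a hypothetical illustration, not Calliphony's actual algorithm: it assumes that each consonant-vowel transition in the recording is delimited by two anchor times (in seconds), and the function names are invented for the example.

```python
def key_to_read_time(pressed, consonant_anchor, vowel_anchor):
    """Binary control: the key only selects a phase, so the transition duration cannot be shaped."""
    return vowel_anchor if pressed else consonant_anchor


def fader_to_read_time(position, consonant_anchor, vowel_anchor):
    """Continuous control: the fader position in [0, 1] scrubs through the transition itself."""
    position = min(max(position, 0.0), 1.0)
    return consonant_anchor + position * (vowel_anchor - consonant_anchor)


# Hypothetical transition from a consonant at 2.0 s to the following vowel at 2.5 s.
slow_gesture = [fader_to_read_time(p / 4, 2.0, 2.5) for p in range(5)]
```

With the key, the read position jumps from one anchor to the other. With the fader, the speed of the gesture directly sets how fast the recording moves through the transition, which is the flexibility the key lacks.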

This system will also allow the real-time control of a concatenative Text-To-Singing system developed in this project.